FraudGPT: The Villain Avatar of ChatGPT

Published on July 25, 2023 | Last updated on December 18, 2025 | 2 min read

The rise of generative AI models has changed the threat landscape drastically. Recent activity on a Dark Web forum shows evidence of the emergence of FraudGPT, which has been circulating on Telegram channels since July 22, 2023.

The Netenrich threat research team discovered what appears to be a new AI tool called "FraudGPT." It is an AI bot built exclusively for offensive purposes, such as crafting spear phishing emails, creating cracking tools, and carding. The tool is currently being sold on various Dark Web marketplaces and on Telegram.

As the promoted content shows, a threat actor can draft an email that will, with a high level of confidence, entice recipients to click on the supplied malicious link. This capability would play a vital role in business email compromise (BEC) phishing campaigns against organizations.

This also helps attackers write enticing and malicious emails to their targets.

As the promotional screenshot shows, the tool also helps identify the most frequently targeted services and sites, which attackers can use to further defraud victims.

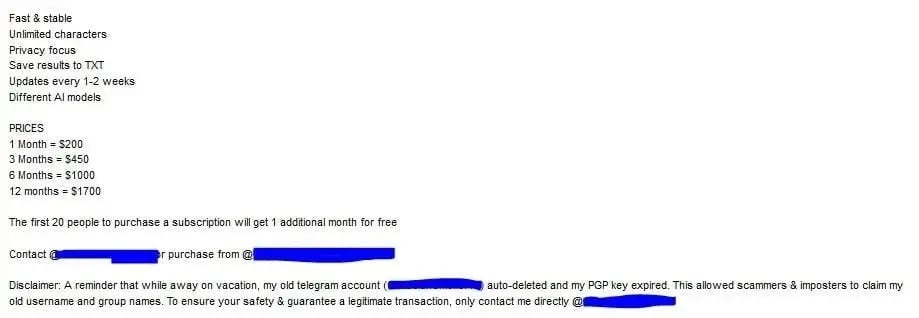

The subscription fee for FraudGPT starts at $200 per month and goes up to $1,700 per year.

Some of the features include:

- Write malicious code

- Create undetectable malware

- Find non-VBV bins

- Create phishing pages

- Create hacking tools

- Find groups, sites, markets

- Write scam pages/letters

- Find leaks, vulnerabilities

- Learn to code/hack

- Find cardable sites

- Escrow available 24/7

- 3,000+ confirmed sales/reviews

Threat Actor Profiling

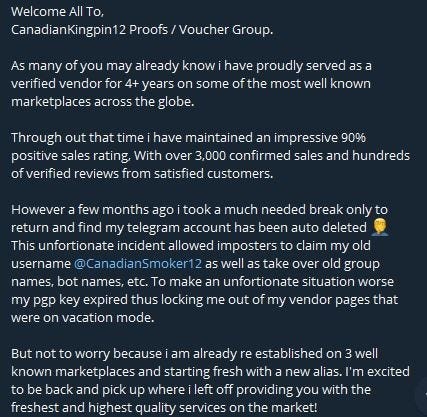

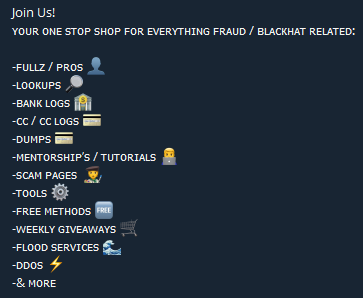

Before launching FraudGPT, the same threat actor created his Telegram Channel on June 23, 2023.

The threat actor claimed to be a verified vendor on various underground Dark Web marketplaces, such as EMPIRE, WHM, TORREZ, WORLD, ALPHABAY, and VERSUS.

Because these marketplaces frequently exit scam, it can be assumed that the threat actor started a Telegram channel to offer his services without the disruption that Dark Web marketplace exit scams cause.

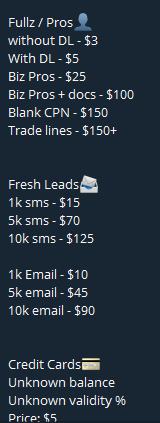

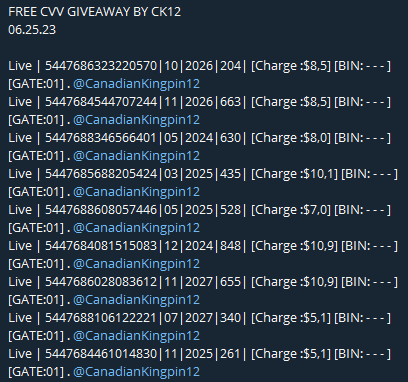

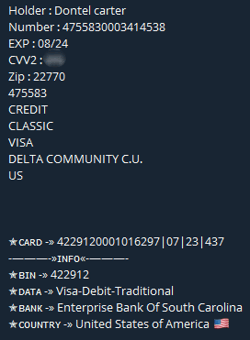

The threat actor advertised hacking activities, such as:

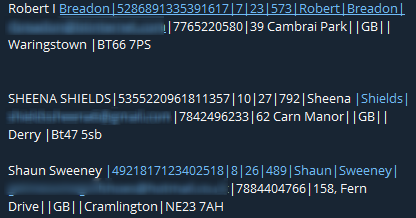

By tracing the threat actor’s identity as it is registered on a Dark Web forum, we find the threat actor’s email address is:

Similar project: WormGPT

WormGPT, a tool similar to FraudGPT, launched on July 13, 2023. These kinds of malicious alternatives to ChatGPT continue to attract criminals and less tech-savvy ne’er-do-wells who target victims for financial gain.

Criminals will not stop innovating — so neither can we

As time goes on, criminals will find further ways to enhance their criminal capabilities using the tools we invent. While organizations can create ChatGPT (and other tools) with ethical safeguards, it isn’t a difficult feat to reimplement the same technology without those safeguards.

In this case, we are still talking about phishing, which is the initial attempt to get into an environment. Conventional tools can still detect AI-enabled phishing and, more importantly, we can also detect the threat actor's subsequent actions.

A defense-in-depth strategy that feeds all available security telemetry into fast analytics has become all the more essential. It is how defenders find these fast-moving threats before a phishing email can turn into ransomware or data exfiltration.

For daily threat intelligence updates, visit Netenrich Knowledge Now to learn about the latest global threats impacting organizations.
